Imagine asking your AI assistant to analyze a product defect. You snap a photo and speak your concern. The system reads the image, hears your voice, and delivers an answer within seconds. That’s Multimodal AI at work: technology that processes information the way humans naturally do.

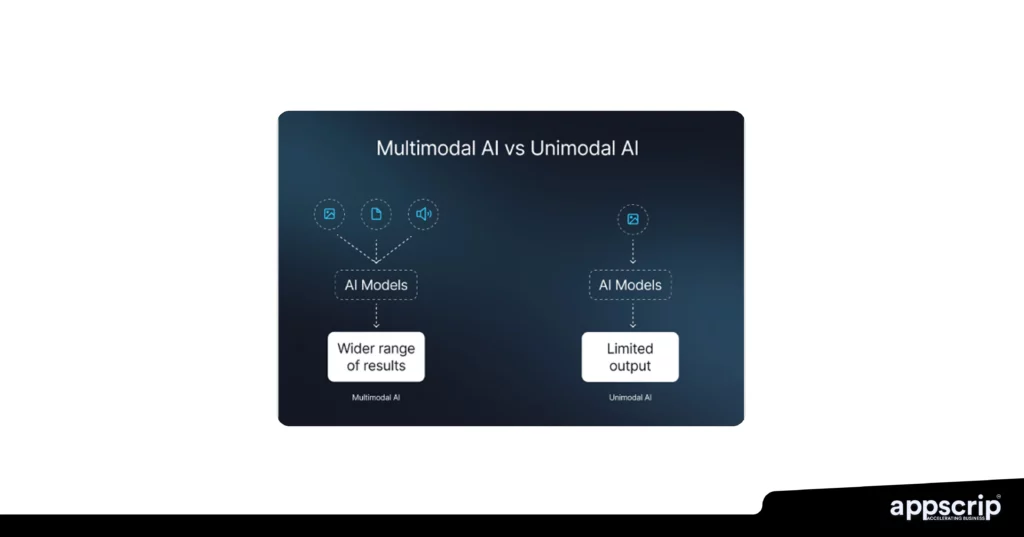

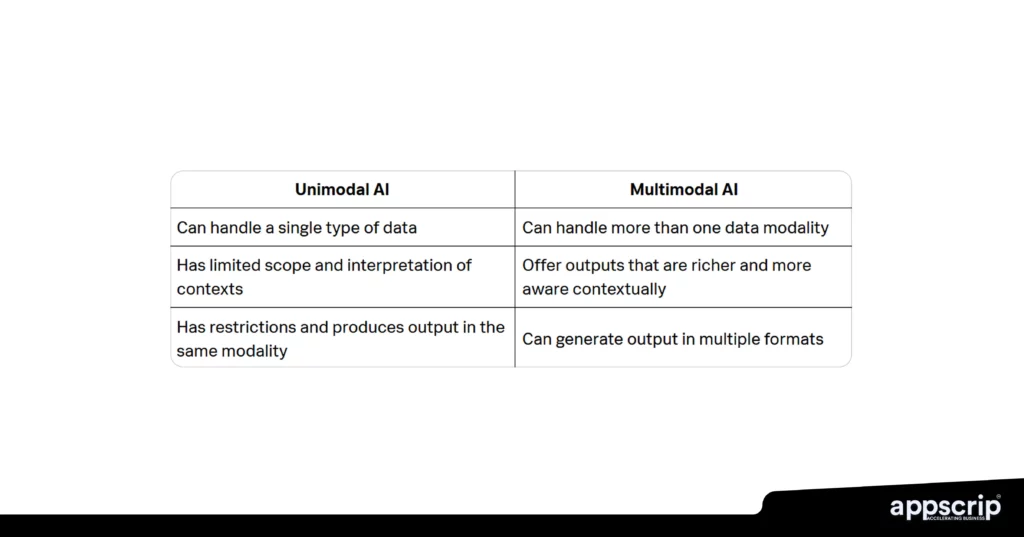

Traditional AI systems handle one data type at a time. A chatbot reads text. An image classifier analyzes pictures. A voice assistant processes speech. Multimodal AI breaks this limitation by processing text, images, audio, and video together, creating smarter systems that understand context the way people do.

This technology represents essential infrastructure for staying competitive. The global multimodal AI market size was estimated at $1.73 billion in 2024 and is projected to reach $10.89 billion by 2030, growing at a CAGR of 36.8% from 2025 to 2030.

Companies across healthcare, finance, retail, and logistics are deploying multimodal artificial intelligence to solve problems single-mode systems can’t handle, achieving faster decision-making, improved customer experiences, and operational efficiency that directly impacts the bottom line.

What Is Multimodal AI and Why Businesses Need It

Multimodal AI refers to artificial intelligence systems capable of processing and integrating multiple data types simultaneously. Unlike traditional AI that focuses on one format, multimodal models work with text, images, audio, video, and sensor data together, creating systems that comprehend information holistically rather than in isolated fragments.

Think about how humans understand the world. You combine what you see, hear, and read to form a complete understanding. Multimodal AI models replicate this natural approach, analyzing a customer’s voice tone while reading their chat message and viewing their uploaded screenshot. The result is a comprehensive understanding that single-modal systems cannot reach.

This integration happens through sophisticated neural networks trained on massive datasets. Each data type gets processed by specialized components before merging into a unified understanding through fusion layers. Modern implementations use advanced architectures like transformers and attention mechanisms, allowing models to weigh the importance of different inputs dynamically.

Core capabilities of Multimodal AI include:

- Processing visual data like photos, videos, and infographics alongside text descriptions

- Analyzing spoken language with written content for full contextual understanding

- Combining sensor readings with textual reports for predictive analytics applications

- Generating content across multiple formats from single prompts or commands

- Cross-referencing different data sources to validate information accuracy

- Understanding complex relationships between diverse input types simultaneously

- Adapting responses based on the most reliable modality for each situation

The business impact becomes clear in real scenarios. A healthcare provider analyzes patient records, lab images, and doctor’s audio notes simultaneously, spotting correlations that isolated analysis would miss.

An e-commerce platform examines product photos, customer reviews, and video demonstrations to personalize recommendations with unprecedented precision. A manufacturing system monitors assembly line cameras while processing sensor data and maintenance logs to predict equipment failures before they occur.

| Feature | Traditional AI | Multimodal AI |
| --- | --- | --- |
| Data Input Types | Single modality only | Multiple modalities simultaneously |
| Context Understanding | Limited to one format | Comprehensive across formats |
| Decision Accuracy | Based on partial information | Enhanced through data fusion |
| Use Case Flexibility | Narrow, specialized tasks | Wide-ranging complex scenarios |
| Human Interaction | Basic, one-dimensional | Natural, multi-sensory |
| Learning Approach | Format-specific training | Cross-modal relationship learning |

Why does this matter now? Customer expectations have evolved dramatically. People want to communicate naturally, sending voice messages, photos, and text interchangeably. Businesses need systems that handle this mixed-media reality without forcing customers into artificial communication constraints. Multimodal AI delivers that seamless experience.

The technology also addresses critical data fragmentation challenges. Most companies store information in various formats across different systems, creating isolated data islands that prevent comprehensive analysis. Multimodal generative AI connects these fragments, finding patterns and insights human analysts miss.

How Multimodal AI Technology Works

Understanding how Multimodal AI operates means looking at three essential processes: data encoding, fusion mechanisms, and unified processing.

Data encoding forms the foundation. Each modality needs translation into formats AI models understand. Text converts into numerical vectors through embedding models that capture semantic meaning. Images transform into pixel arrays or feature representations capturing edges, textures, and objects. Audio becomes spectrograms showing frequency distributions over time.
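To make the encoding step concrete, here is a minimal sketch using only the Python standard library. The function names (`encode_text`, `encode_image`, `encode_audio`) are hypothetical, and each encoder is a deliberately simple stand-in: a hashed bag-of-words replaces a trained embedding model, and a naive discrete Fourier transform replaces a production spectrogram routine.

```python
import math
import zlib

def encode_text(text, dim=8):
    """Toy text encoder: hashed bag-of-words, L2-normalized.
    A real system would use a trained embedding model instead."""
    vec = [0.0] * dim
    for token in text.lower().split():
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]

def encode_image(pixels):
    """Scale 8-bit grayscale pixel rows to floats in [0, 1]."""
    return [[p / 255.0 for p in row] for row in pixels]

def encode_audio(samples, frame_size=8):
    """Toy magnitude spectrogram: naive DFT over non-overlapping frames.
    Rows are time frames, columns are frequency bins."""
    spectrogram = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        bins = []
        for k in range(frame_size // 2 + 1):
            angle = 2 * math.pi * k / frame_size
            re = sum(x * math.cos(angle * n) for n, x in enumerate(frame))
            im = -sum(x * math.sin(angle * n) for n, x in enumerate(frame))
            bins.append(math.hypot(re, im))
        spectrogram.append(bins)
    return spectrogram

text_vec = encode_text("scratch on the left panel")
image_arr = encode_image([[0, 128, 255], [255, 128, 0]])
# A pure tone completing 2 cycles per 8-sample frame, 16 samples in total.
tone = [math.sin(2 * math.pi * 2 * n / 8) for n in range(16)]
spec = encode_audio(tone)
```

Whatever the modality, the output is plain numbers, which is what allows the later fusion stages to combine them. In the audio example, the energy of the test tone lands in frequency bin 2 of each frame.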

The real innovation lies in fusion mechanisms, meaning how systems combine encoded inputs intelligently. Three main approaches exist:

Early fusion merges raw data before processing, allowing models to learn relationships from the ground up. This works well when modalities closely relate, like combining audio and video for lip-reading applications.

Late fusion processes each modality separately through specialized networks, then combines results at decision time. This approach excels when modalities contribute independently, for example analyzing product reviews and product images separately, then merging insights for recommendations.

Hybrid fusion, the most sophisticated approach, integrates information at multiple stages throughout the pipeline. Modern multimodal models increasingly adopt hybrid approaches, optimizing fusion strategies for specific tasks.
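The difference between early and late fusion can be sketched in a few lines of Python. Everything here is illustrative: `linear_score` is a hypothetical stand-in for a trained model, and the feature values, weights, and reliability numbers are made up for the example.

```python
def early_fusion(audio_features, video_features):
    """Early fusion: concatenate features so one model sees both modalities."""
    return audio_features + video_features

def linear_score(features, weights, bias=0.0):
    """Hypothetical stand-in for a trained model: a weighted sum."""
    return sum(f * w for f, w in zip(features, weights)) + bias

def late_fusion(scores, reliabilities):
    """Late fusion: average per-modality scores, weighted by reliability."""
    total = sum(reliabilities)
    return sum(s * r for s, r in zip(scores, reliabilities)) / total

audio = [0.2, 0.7]
video = [0.9, 0.1, 0.4]

# Early: one joint model over the concatenated 5-dimensional input.
joint = early_fusion(audio, video)
early_score = linear_score(joint, [0.5, 0.5, 1.0, 0.0, 0.25])

# Late: separate models per modality, combined at decision time.
audio_score = linear_score(audio, [0.5, 0.5])
video_score = linear_score(video, [1.0, 0.0, 0.25])
late_score = late_fusion([audio_score, video_score], reliabilities=[1.0, 3.0])
```

A hybrid design would mix the two ideas, for instance by feeding both the joint vector and the per-modality scores into a final decision step. The reliability weights in `late_fusion` are one simple way to favor the more trustworthy modality in a given situation.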

The processing workflow typically follows these steps:

- Raw data collection from cameras, microphones, text inputs, sensors, and databases

- Pre-processing and normalization to standardize formats and remove noise artifacts

- Feature extraction using specialized encoders tailored to each modality’s characteristics

- Cross-modal alignment identifying temporal and semantic relationships between different inputs

- Fusion layer integration where modalities combine through learned attention mechanisms

- Task-specific processing for classification, generation, prediction, or reasoning tasks

- Output generation in the requested format, potentially spanning multiple modalities
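The steps above can be sketched as a chain of small functions. This is a toy pipeline under stated assumptions, not a production design: the readings, the `(mean, peak)` features, the one-second alignment window, and the `1.5` decision threshold are all invented for illustration, and plain concatenation stands in for a learned fusion layer.

```python
def normalize(values):
    """Pre-processing: rescale a list of readings to the range [0, 1]."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0
    return [(v - lo) / span for v in values]

def extract_features(values):
    """Feature extraction: summarize a signal as [mean, peak]."""
    return [sum(values) / len(values), max(values)]

def align(events_a, events_b, max_gap=1.0):
    """Cross-modal alignment: pair events whose timestamps are close."""
    return [(a, b) for a in events_a for b in events_b
            if abs(a["t"] - b["t"]) <= max_gap]

def fuse(feature_sets):
    """Fusion: concatenate per-modality features into one vector."""
    return [f for features in feature_sets for f in features]

def classify(fused, threshold=1.5):
    """Task-specific step: flag the case when summed evidence is high."""
    return "urgent" if sum(fused) >= threshold else "routine"

# Collection: hypothetical readings from a camera-based damage detector
# and a voice-stress estimator, with timestamps in seconds.
damage = [{"t": 0.5, "score": 10.0}, {"t": 2.0, "score": 80.0},
          {"t": 5.0, "score": 40.0}]
stress = [{"t": 2.4, "score": 0.9}, {"t": 9.0, "score": 0.1}]

damage_scores = normalize([d["score"] for d in damage])
stress_scores = normalize([s["score"] for s in stress])
pairs = align(damage, stress)
fused = fuse([extract_features(damage_scores),
              extract_features(stress_scores)])
label = classify(fused)

# Output: a structured response that a UI layer could render.
response = {"label": label, "aligned_events": len(pairs)}
```

Each stage takes the previous stage's output, so any one of them can be swapped for a stronger component without touching the rest.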

| Processing Stage | Function | Example |
| --- | --- | --- |
| Input Collection | Gather diverse data | Customer submits photo plus voice message describing product issue |
| Encoding | Convert to numerical format | Image to 512-dimensional vectors, audio to mel-frequency arrays |
| Feature Extraction | Identify key characteristics | Object detection finds damaged component, speech analysis detects frustration |
| Fusion | Combine modality insights | System links visual damage severity with emotional urgency from voice |
| Analysis | Process unified representation | Determine that issue priority is high and needs immediate attention based on combined signals |
| Output | Deliver response | Text instructions plus annotated image highlighting problem area |

Modern multimodal models leverage transformer architectures whose attention mechanisms let models focus on relevant connections between different input types. When processing a customer support image and text description, attention helps the model link the word “scratch” in text to actual scratch marks visible in the photo.
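A minimal sketch of that linking step, written as scaled dot-product attention with one text token as the query and image regions as keys and values. The 4-dimensional embeddings are hand-picked for illustration and the region names are hypothetical. A real model would learn these vectors and apply learned projection matrices first.

```python
import math

def softmax(scores):
    """Convert raw scores to weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(query, keys, values):
    """Scaled dot-product attention for one query over several keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    attended = [sum(w * v[i] for w, v in zip(weights, values))
                for i in range(dim)]
    return weights, attended

# Hypothetical embedding for the text token "scratch".
scratch_token = [1.0, 0.0, 2.0, 0.0]

# Hypothetical embeddings for three regions of the uploaded photo.
image_regions = {
    "background": [0.0, 1.0, 0.0, 1.0],
    "scratched_area": [1.0, 0.0, 2.0, 0.0],
    "logo": [0.5, 0.5, 0.0, 0.0],
}
names = list(image_regions)
vectors = [image_regions[name] for name in names]

weights, attended = cross_attention(scratch_token, vectors, vectors)
best_region = names[weights.index(max(weights))]
```

Here the region whose embedding points in the same direction as the token receives the largest attention weight, so `best_region` is `"scratched_area"`.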

Cloud infrastructure makes this accessible without massive capital investment. Platforms like AWS, Google Cloud, and Microsoft Azure deliver multimodal services through unified APIs, enabling businesses to deploy sophisticated capabilities without building infrastructure from scratch.

Real-World Applications Across Industries

Multimodal AI actively reshapes how industries operate today.

Healthcare

Healthcare diagnostics represents one of the most impactful applications. Multimodal AI synthesizes medical images, patient histories, lab results, and clinical notes simultaneously. A cancer screening system analyzes mammogram images, patient medical history, genetic markers from lab results, and radiologist voice notes together. The fusion reveals risk factors invisible to single-modal analysis.

Finance

Financial services deploy Multimodal AI for sophisticated fraud detection and risk assessment. When someone applies for a loan, systems review scanned documents (checking for forgery indicators), written application responses (assessing consistency), voice stress patterns during verification calls (detecting possible deception), and typing dynamics (revealing urgency or hesitation). Fraudulent applications often reveal inconsistencies across modalities.

E-commerce

E-commerce personalization reaches unprecedented sophistication. When shoppers upload photos asking “find me something like this,” systems understand visual preferences, read text queries, and match against inventory. A customer uploads a dress photo with the text “something similar but more casual.” The system extracts visual features, interprets “casual” to modify styling, and weighs previous purchase history and browsing behavior to deliver precisely targeted recommendations.

| Industry | Application | Modalities Used | Business Outcome |
| --- | --- | --- | --- |
| Healthcare | Diagnostic Analysis | Medical images, patient records, lab data, audio notes | 23% faster diagnosis, 15% better accuracy |
| Retail | Visual Search & Recommendations | Product photos, reviews, videos, browsing data | 35% conversion increase, 25% fewer returns |
| Finance | Fraud Detection Systems | Transaction data, documents, voice calls, biometrics | 50% better detection, 40% fewer false positives |
| Manufacturing | Quality Control Automation | Visual inspection, sensors, maintenance logs, audio | 28% defect reduction, 30% downtime decrease |
| Automotive | Autonomous Driving | Cameras, LIDAR, radar, GPS, weather data | 40% navigation error reduction, safer decisions |

Customer Support

Customer support transforms when agents leverage multimodal tools. Customers send screenshots, describe issues verbally, attach error logs, and chat simultaneously. AI assistants process all inputs to understand problems comprehensively. Resolution times drop 40-50% because complete context arrives immediately, eliminating information-gathering phases.

Manufacturing

Manufacturing quality control combines visual inspection with sensor data and maintenance records. Assembly line cameras capture product images while temperature sensors monitor equipment heat and vibration monitors detect abnormal operation. This combination enables precise predictive maintenance.

A system might notice paint application trending toward uneven while temperature sensors show hotter operation and maintenance logs reveal a recently replaced heating element. Connecting these signals lets it predict and prevent issues before defects occur.

Business Benefits and Implementation Strategy

The business case for Multimodal AI extends beyond technological sophistication. Companies implementing multimodal systems report measurable improvements across key performance indicators.

Operational efficiency gains appear quickly. Customer support teams resolve issues 40% faster when AI analyzes voice calls, chat logs, and screenshots simultaneously. Average handle time drops from 18 minutes to 11 minutes per support ticket. First-contact resolution rates increase from 67% to 89%.
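As a quick sanity check on those support figures, the short calculation below works out the relative changes they imply. The helper name `relative_change` is just an illustrative choice.

```python
def relative_change(before, after):
    """Fractional change from `before` to `after` (negative means a drop)."""
    return (after - before) / before

# Average handle time: 18 minutes down to 11 minutes per ticket.
handle_time_change = relative_change(18, 11)

# First-contact resolution: 67% up to 89%.
fcr_point_gain = 89 - 67                      # percentage points
fcr_relative_gain = relative_change(67, 89)

print(f"Handle time change: {handle_time_change:.1%}")        # -38.9%
print(f"FCR gain: {fcr_point_gain} points "
      f"({fcr_relative_gain:.1%} relative)")                  # 22 points (32.8% relative)
```

The handle-time drop works out to roughly 39%, in line with the "40% faster" headline. The first-contact resolution gain is 22 percentage points, or about 33% in relative terms, so it is worth stating which of the two is meant when quoting it.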

Revenue growth stems from enhanced customer experiences. E-commerce platforms using multimodal product recommendations see conversion rates increase 30-45%. A fashion retailer reports that average order value increases 22%, cart abandonment drops 18%, and return rates decrease 24%, delivering 38% revenue growth from existing traffic.

Financial services report fraud detection improvements exceeding 50%. A credit card processor improves from 67% to 91% detection while cutting false positives from 12% to 7%. Fraud losses decrease $12 million annually while investigation costs drop $3 million.

The quantifiable business benefits include:

- Reduced customer support costs through faster resolution (40% efficiency gain)

- Increased customer lifetime value from improved personalization (22-35% increase)

- Lower operational expenses from predictive maintenance (30-40% downtime reduction)

- Enhanced decision-making speed from consolidating data sources (50% faster analysis)

- Competitive differentiation through superior experiences (measured in market share gains)

- Risk mitigation via comprehensive fraud detection (50%+ improvement in detection)

- Higher revenue per customer from better recommendations (20-30% AOV increase)

Implementation challenges require strategic approaches. Data quality and availability form the first major obstacle. Multimodal models require aligned datasets pairing different formats. Infrastructure requirements exceed traditional AI deployments significantly, because processing video, audio, and images simultaneously demands substantial computing power.

Ethical considerations multiply with multimodal systems. Facial recognition combined with voice analysis raises privacy concerns. Systems making decisions based on voice tone could introduce bias, since accents or speech patterns could trigger unfair outcomes. Responsible deployment requires establishing governance frameworks.

Strategic implementation approaches include:

- Starting with focused pilot projects demonstrating clear ROI before enterprise rollout

- Choosing use cases where multimodal advantages exceed traditional approaches significantly (40%+ improvement targets)

- Building on existing AI investments rather than replacing them entirely

- Establishing data governance frameworks ensuring quality and compliance before collection

- Partnering with platform providers offering pre-trained multimodal models (reducing development time 60-70%)

- Creating feedback loops continuously improving performance through production data

- Investing in change management ensuring users understand and embrace new capabilities

The path forward involves balancing ambition with pragmatism. Multimodal AI offers transformative capabilities but demands significant investment. Companies should assess readiness through capability audits examining existing data quality, technical infrastructure, organizational skills, and cultural readiness. Start with high-impact, low-complexity pilots demonstrating value quickly.